The application of AI techniques to run autonomous enterprise business processes has experienced a resurgence since the last 'AI winter.' This newfound interest in 'AI-enabling' enterprise workflows is triggering the evolution of brand-new business models across industries, and it will offer businesses as many new opportunities as it does challenges.

Today's successful data-guided enterprises deal with a mass of digital information, the source of new economic value and innovation. Their success is determined by how well they ferret out compelling insights from this big data.

The brute-force approach to unearthing the underlying hidden patterns inside complex data is unsuitable for data-driven decision-making. The current wave of advances in machine learning and artificial intelligence gives data-guided businesses a significant leg up—they use intelligent machines to make cheap, accurate predictions. Prediction is a central input into decision-making in an autonomous enterprise workflow.

Simply put, enterprise workflows turn inputs into outputs, and multiple tasks make up a workflow. The unit of AI workflow design is 'The Task.' A collection of decisions produces a task, and task performance improves when those decisions use accurate predictions made from data.

During the 'business process reengineering' era, businesses took a fresh look at their enterprise workflows and redesigned them around their objectives in order to exploit what was then a new general-purpose technology: computers. AI, too, is a general-purpose technology. Similarly, we need to step back, carefully deconstruct the workflows, and identify the tasks where AI enablement has a beneficial role. We need to rethink the process; 'throwing some AI' at a problem or into an existing process will not help.

As test leaders, we are interested in how AI can lead to a fundamental change in the software testing process. This article aims to deconstruct the software testing process and find ways to apply AI techniques to perform intelligent and optimal testing.

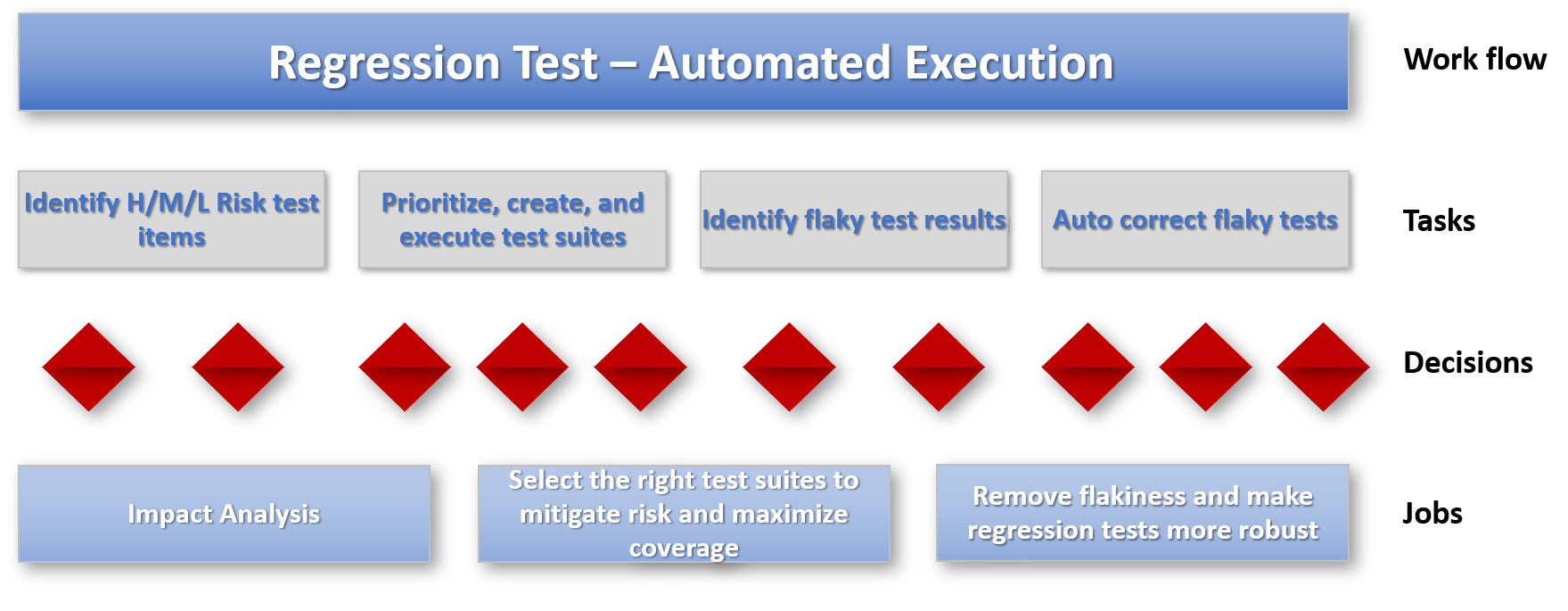

Consider the process of 'Automated Regression Test Execution and Result Analysis' as a candidate for AI enablement. The test team needs to perform the following jobs in this workflow.

- Perform 'impact analysis' based on the changes.

- Select the right test suites that will mitigate the risk and maximize the coverage.

- Identify the 'Flaky' test results, correct the flaky tests, and make the test suites more robust.
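To make the first two jobs concrete, here is a minimal sketch of change-based test selection. It is a plain rule-based stand-in rather than an AI model, and everything in it is an assumption for illustration: the `COVERAGE_MAP` contents, the suite and file names, and the `select_suites` helper are all hypothetical. It simply ranks suites by how many changed files they exercise.

```python
from typing import Dict, Iterable, List, Set

# Hypothetical coverage map: which source files each test suite exercises.
COVERAGE_MAP: Dict[str, Set[str]] = {
    "suite_checkout": {"cart.py", "payment.py"},
    "suite_login": {"auth.py", "session.py"},
    "suite_search": {"search.py", "catalog.py"},
    "suite_profile": {"auth.py", "profile.py"},
}


def select_suites(changed_files: Iterable[str],
                  coverage_map: Dict[str, Set[str]]) -> List[str]:
    """Return the suites that touch at least one changed file.

    Suites are ordered by how many changed files they cover (most first),
    with ties broken alphabetically so the result is deterministic.
    """
    changed = set(changed_files)
    overlap = {suite: len(files & changed)
               for suite, files in coverage_map.items()}
    impacted = [suite for suite, count in overlap.items() if count > 0]
    return sorted(impacted, key=lambda suite: (-overlap[suite], suite))


# Impact analysis for a change that touched two files.
selected = select_suites(["auth.py", "profile.py"], COVERAGE_MAP)
print(selected)  # ['suite_profile', 'suite_login']
```

In a real pipeline the coverage map would come from instrumentation or historical data, and a learned model could replace the simple overlap count as the ranking signal.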

We need to closely examine this sub-process and break it down into its constituent tasks predicated on decisions that AI tools will significantly enhance. There are many opportunities in this workflow to use machine predictions to improve decisions and gain benefits by more efficiently running this sub-process. Let us look at one such prediction that can help us decide whether a test result is 'Flaky.'

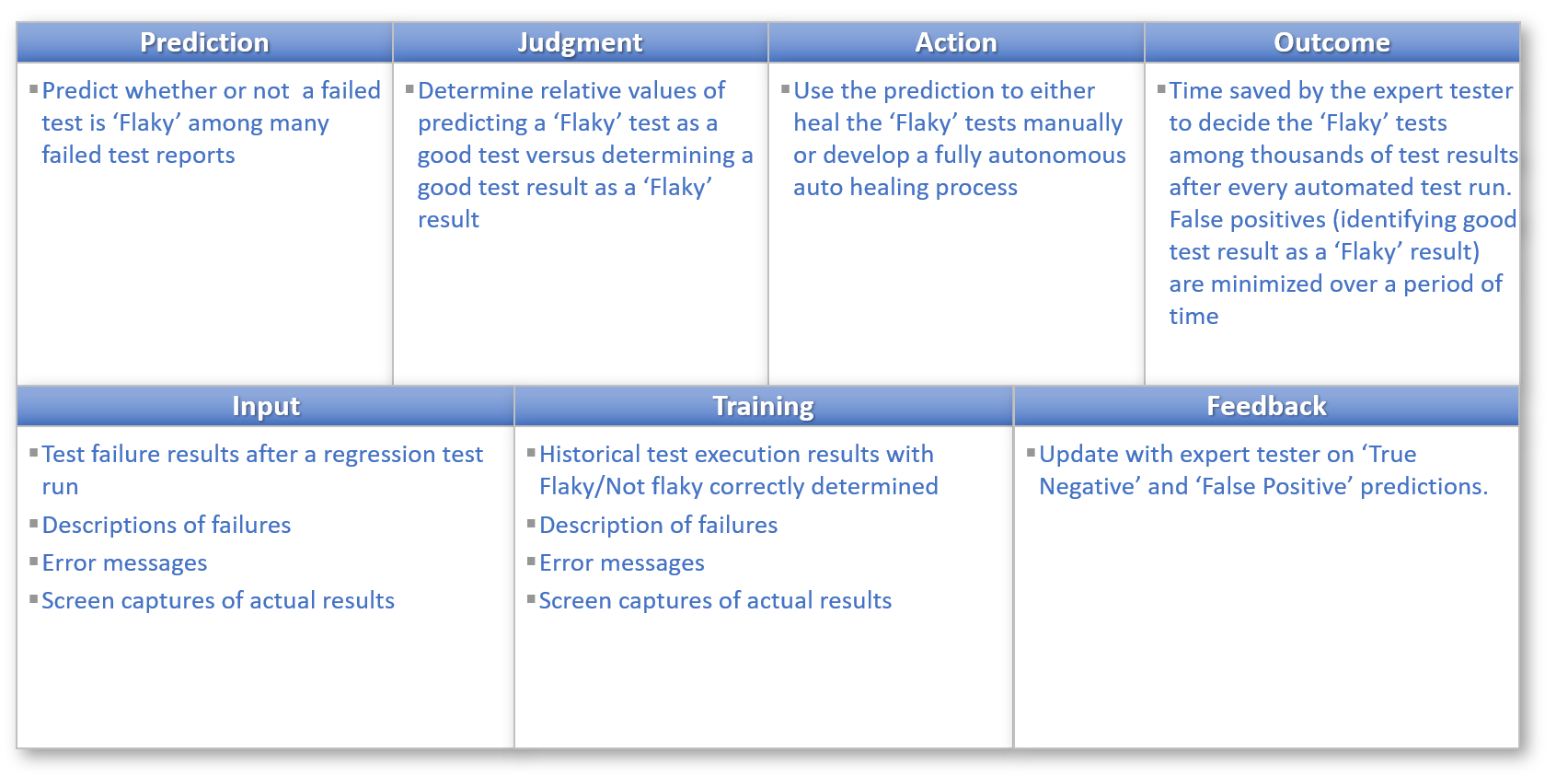

The next step is to decompose each decision into its constituent elements.

Ajay Agrawal, Joshua Gans, and Avi Goldfarb introduced a simple decision-making thinking tool, the AI Canvas, in their book 'Prediction Machines: The Simple Economics of Artificial Intelligence.'

Let us use the AI Canvas to detail the elements of one decision: is a failed test result due to a 'Flaky' test? If the reason is 'Flakiness' in the test, we will attempt to correct the test before rerunning it; otherwise, we will flag a defect. Here is how we use this thinking tool.

ACTION (What are you trying to do?): Use the prediction to either heal the 'Flaky' tests manually or develop a fully autonomous auto-healing process

PREDICTION (What do you need to know to make the decision?): Predict whether or not a failed test is 'Flaky' among many failed test reports

JUDGMENT (How do you value different outcomes and errors?): Determine the relative cost of the two possible errors: predicting that a 'Flaky' test is a good test versus predicting that a good test result is a 'Flaky' result

OUTCOME (What are your metrics for task success?): Time saved for the expert tester, who otherwise has to pick out the 'Flaky' tests among thousands of test results after every automated test run. Aim to minimize 'False Positives' (identifying a good test result as a 'Flaky' result) over time.

INPUT (What data do you need to run the predictive algorithm?): Test failure results after a regression test run, descriptions of failures, error messages, and screen captures of actual results

FEEDBACK (How can you use the outcomes to improve the algorithm?): Have the expert tester review the predictions (the 'True Negative' and 'False Positive' cases), record the verdicts, and use them to keep retraining the model
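The canvas elements above can be sketched in a few lines of Python. This is an illustrative toy, not a production design: the `FlakyPredictor` class (a small bag-of-words naive Bayes model), the `decide` and `false_positive_rate` helpers, the sample error messages, and the cost values are all assumptions made for this example. PREDICTION is the estimated probability that a failure is flaky, JUDGMENT is the pair of error costs, ACTION is the cheaper choice in expectation, OUTCOME is the false positive rate, and FEEDBACK is retraining on expert-labeled failures.

```python
import math
from collections import Counter
from typing import List, Tuple

Example = Tuple[str, bool]  # (error message, expert says the test is flaky)


class FlakyPredictor:
    """Toy bag-of-words naive Bayes model with add-one smoothing."""

    def __init__(self) -> None:
        self.word_counts = {True: Counter(), False: Counter()}
        self.class_counts = {True: 0, False: 0}

    def train(self, examples: List[Example]) -> None:
        """INPUT/FEEDBACK: fold expert-labeled failures into the counts."""
        for message, is_flaky in examples:
            self.class_counts[is_flaky] += 1
            self.word_counts[is_flaky].update(message.lower().split())

    def predict_flaky_probability(self, message: str) -> float:
        """PREDICTION: estimated probability that this failure is flaky."""
        vocab = set(self.word_counts[True]) | set(self.word_counts[False])
        total = sum(self.class_counts.values())
        log_scores = {}
        for label in (True, False):
            # Smoothed class prior, then smoothed per-word likelihoods.
            log_p = math.log((self.class_counts[label] + 1) / (total + 2))
            n_words = sum(self.word_counts[label].values())
            for word in message.lower().split():
                if word in vocab:  # words never seen in training are ignored
                    count = self.word_counts[label][word]
                    log_p += math.log((count + 1) / (n_words + len(vocab)))
            log_scores[label] = log_p
        # Normalize the two log scores into a probability.
        top = max(log_scores.values())
        weights = {k: math.exp(v - top) for k, v in log_scores.items()}
        return weights[True] / (weights[True] + weights[False])


def decide(p_flaky: float,
           cost_missed_defect: float = 10.0,
           cost_wasted_triage: float = 1.0) -> str:
    """JUDGMENT + ACTION: choose the action with the lower expected cost.

    The default costs are assumed values: treating a real defect as flaky
    is taken to be ten times worse than triaging a flaky test as a defect.
    """
    expected_cost_heal = (1 - p_flaky) * cost_missed_defect
    expected_cost_flag = p_flaky * cost_wasted_triage
    if expected_cost_heal < expected_cost_flag:
        return "heal_and_rerun"
    return "flag_defect"


def false_positive_rate(reviewed: List[Tuple[bool, bool]]) -> float:
    """OUTCOME: share of real failures that were predicted as flaky.

    Each item is (predicted_flaky, actually_flaky) after expert review.
    """
    fp = sum(1 for pred, actual in reviewed if pred and not actual)
    tn = sum(1 for pred, actual in reviewed if not pred and not actual)
    return fp / (fp + tn) if (fp + tn) else 0.0


model = FlakyPredictor()
model.train([
    ("timeout waiting for element login button", True),
    ("connection reset by peer while loading page", True),
    ("stale element reference timeout", True),
    ("expected total 100 but got 90", False),
    ("assertion failed expected status 200 but got 500", False),
])

p = model.predict_flaky_probability("timeout waiting for page")
print(decide(p))  # heal_and_rerun
print(decide(model.predict_flaky_probability("expected total 100 but got 95")))  # flag_defect
```

With the assumed 10:1 cost ratio, a failure is only treated as flaky when the predicted probability exceeds roughly 0.91, which reflects the canvas's emphasis on keeping 'False Positives' low. Calling `model.train` again with each expert verdict closes the feedback loop.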

Conclusion: AI techniques can significantly benefit the software testing process if we take a step back, carefully deconstruct the process, and identify the tasks where critical decisions can be made using prediction and judgment, informed by data.